What is FLAN-T5? Is FLAN-T5 a better alternative to GPT-3?

John Jacob

Artificial intelligence (AI) has made significant strides in recent years, driven largely by advances in large language models (LLMs) and their applications. One of the most prominent models in this domain is GPT-3, developed by OpenAI. It is not the only model making waves, however: FLAN-T5, developed by Google Research, has attracted considerable attention as a potential alternative to GPT-3.

- FLAN stands for “Fine-tuned LAnguage Net”

- T5 stands for “Text-To-Text Transfer Transformer”

Back in 2019, Google published the paper "Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer", which introduced the original T5 architecture. The pretrained encoder-decoder model worked well on multiple tasks and was particularly well suited to translation and summarization. In 2022, Google followed up with a paper titled "Scaling Instruction-Finetuned Language Models". Alongside the paper, they released a set of updated "FLAN-T5" model checkpoints, and they also reported the results of applying the same finetuning technique to their PaLM model under the name FLAN-PaLM. These models were "instruction finetuned" on more than 1,800 language tasks, which significantly improved their reasoning skills and promptability. From the Hugging Face model card's convenient TL;DR:

"If you already know T5, FLAN-T5 is just better at everything. For the same number of parameters, these models have been fine-tuned on more than 1000 additional tasks covering also more languages"

FLAN-T5 performs well on a wide range of natural language processing tasks, including language translation, text classification, and question answering. Its smaller checkpoints are compact enough to run on modest hardware, which makes them attractive for latency-sensitive applications. Because the model weights are publicly released, developers can also fine-tune FLAN-T5 to meet their specific needs. With this combination of capability and accessibility, FLAN-T5 is well positioned to become a major player in natural language processing. Google's instruction finetuning strategy is discussed in greater depth below.

How does it work?

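FLAN-T5 is an encoder-decoder model. Like T5, on which it is based, it treats every task in a text-to-text format: both the input and the output are text strings. The underlying T5 model was pre-trained on a multi-task mixture of unsupervised and supervised tasks, each converted into this text-to-text format, and FLAN-T5 adds instruction finetuning on top.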
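To make the text-to-text idea concrete, here is a minimal plain-Python sketch (no model involved) of how different kinds of tasks become pairs of input text and target text. The task prefixes such as `translate English to German:` and `stsb sentence1:` follow the examples in the T5 paper; the dataclass and helper function names are hypothetical. FLAN-T5 itself also accepts free-form natural-language instructions, so these exact prefixes are not required when prompting it.

```python
from dataclasses import dataclass


@dataclass
class TextToTextExample:
    source: str  # text fed to the encoder
    target: str  # text the decoder is trained to produce


def translation(english, german):
    return TextToTextExample(f"translate English to German: {english}", german)


def summarization(article, summary):
    return TextToTextExample(f"summarize: {article}", summary)


def acceptability(sentence, is_acceptable):
    # Even a classification label becomes a target *string*.
    label = "acceptable" if is_acceptable else "not acceptable"
    return TextToTextExample(f"cola sentence: {sentence}", label)


def similarity(sentence1, sentence2, score):
    # Regression (STS-B): round the score to the nearest 0.2 and emit it as text.
    rounded = round(score * 5) / 5
    return TextToTextExample(
        f"stsb sentence1: {sentence1} sentence2: {sentence2}", f"{rounded:.1f}"
    )


for ex in [
    translation("That is good.", "Das ist gut."),
    acceptability("The course is jumping well.", False),
]:
    print(ex.source, "->", ex.target)
```

Because every task shares the same string-in, string-out interface, one model, one loss, and one decoding procedure serve all of them.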

During pre-training, the underlying T5 model was fed a large corpus of text (the C4 web-crawl dataset) and trained with a fill-in-the-blank objective: spans of the input text are replaced with placeholder (sentinel) tokens, and the model learns to predict the missing words. Repeated over many training steps, this teaches the model to generate text similar to its training data.
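The fill-in-the-blank objective (called "span corruption" in the T5 paper) can be sketched as follows. For readability this version splits on whitespace and takes the masked spans as an argument; the real objective operates on SentencePiece tokens and samples spans at random (by default about 15% of tokens, with an average span length of 3). The sentinel names `<extra_id_0>`, `<extra_id_1>`, ... match those used by the Hugging Face T5 tokenizer; the `span_corrupt` function itself is hypothetical.

```python
def span_corrupt(tokens, spans):
    """Apply T5-style span corruption.

    tokens: list of tokens (here: whitespace-split words, a simplification)
    spans:  sorted, non-overlapping (start, length) pairs to mask
    Returns (input_text, target_text).
    """
    inputs, targets = [], []
    pos = 0
    for i, (start, length) in enumerate(spans):
        sentinel = f"<extra_id_{i}>"
        inputs.extend(tokens[pos:start])  # keep the text before the span
        inputs.append(sentinel)           # replace the whole span with one sentinel
        targets.append(sentinel)          # target: sentinel followed by the dropped tokens
        targets.extend(tokens[start:start + length])
        pos = start + length
    inputs.extend(tokens[pos:])
    targets.append(f"<extra_id_{len(spans)}>")  # a final sentinel closes the target
    return " ".join(inputs), " ".join(targets)


tokens = "Thank you for inviting me to your party last week .".split()
source, target = span_corrupt(tokens, [(2, 2), (8, 1)])
print(source)  # Thank you <extra_id_0> me to your party <extra_id_1> week .
print(target)  # <extra_id_0> for inviting <extra_id_1> last <extra_id_2>
```

The target only contains the missing pieces, which keeps training sequences short compared with reconstructing the whole input.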

Once trained, FLAN-T5 can be used to perform a variety of NLP tasks, such as text generation, language translation, sentiment analysis, and text classification.

Solving Tasks and Prompting:

A standard way to solve tasks with language models is prompting. The most popular variants are zero-shot, one-shot, and few-shot prompting.

Zero-shot prompting asks the model to perform a task given only an instruction or question, with no examples in the prompt and no fine-tuning or training data specific to that task. The model relies on its pre-trained knowledge to make predictions.

One-shot prompting includes exactly one example of the task (an input together with its expected output) in the prompt, ahead of the actual input the model should respond to.

Few-shot prompting includes a small number of such input/output examples. The model uses them to infer the structure of the task and the expected response format, and applies that to the given input.

These three prompting techniques provide different levels of contextual information and instruction, enabling researchers to evaluate the versatility and generalization capabilities of language models.
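The three prompting styles differ only in how many worked examples precede the query. A minimal sketch, assuming a hypothetical sentiment task and a made-up `Review:`/`Sentiment:` layout (any consistent format would do):

```python
def build_prompt(instruction, examples, query):
    """Assemble a prompt from an instruction, k worked examples, and the query.

    k = 0 -> zero-shot, k = 1 -> one-shot, k > 1 -> few-shot.
    """
    parts = [instruction]
    for text, answer in examples:
        parts.append(f"Review: {text}\nSentiment: {answer}")
    parts.append(f"Review: {query}\nSentiment:")  # the model completes this line
    return "\n\n".join(parts)


instruction = "Classify the sentiment of each review as positive or negative."
demos = [
    ("The battery lasts all day.", "positive"),
    ("It broke after a week.", "negative"),
    ("Setup took two minutes.", "positive"),
]
query = "The screen is too dim to read outdoors."

zero_shot = build_prompt(instruction, [], query)
one_shot = build_prompt(instruction, demos[:1], query)
few_shot = build_prompt(instruction, demos, query)
print(few_shot)
```

Keeping the demonstrations in exactly the same layout as the final query is what lets the model pick up the expected answer format.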

The Scaling Instruction-Finetuned Language Models paper:

Ref: Paper: https://arxiv.org/abs/2210.11416 | https://arxiv.org/pdf/2210.11416.pdf

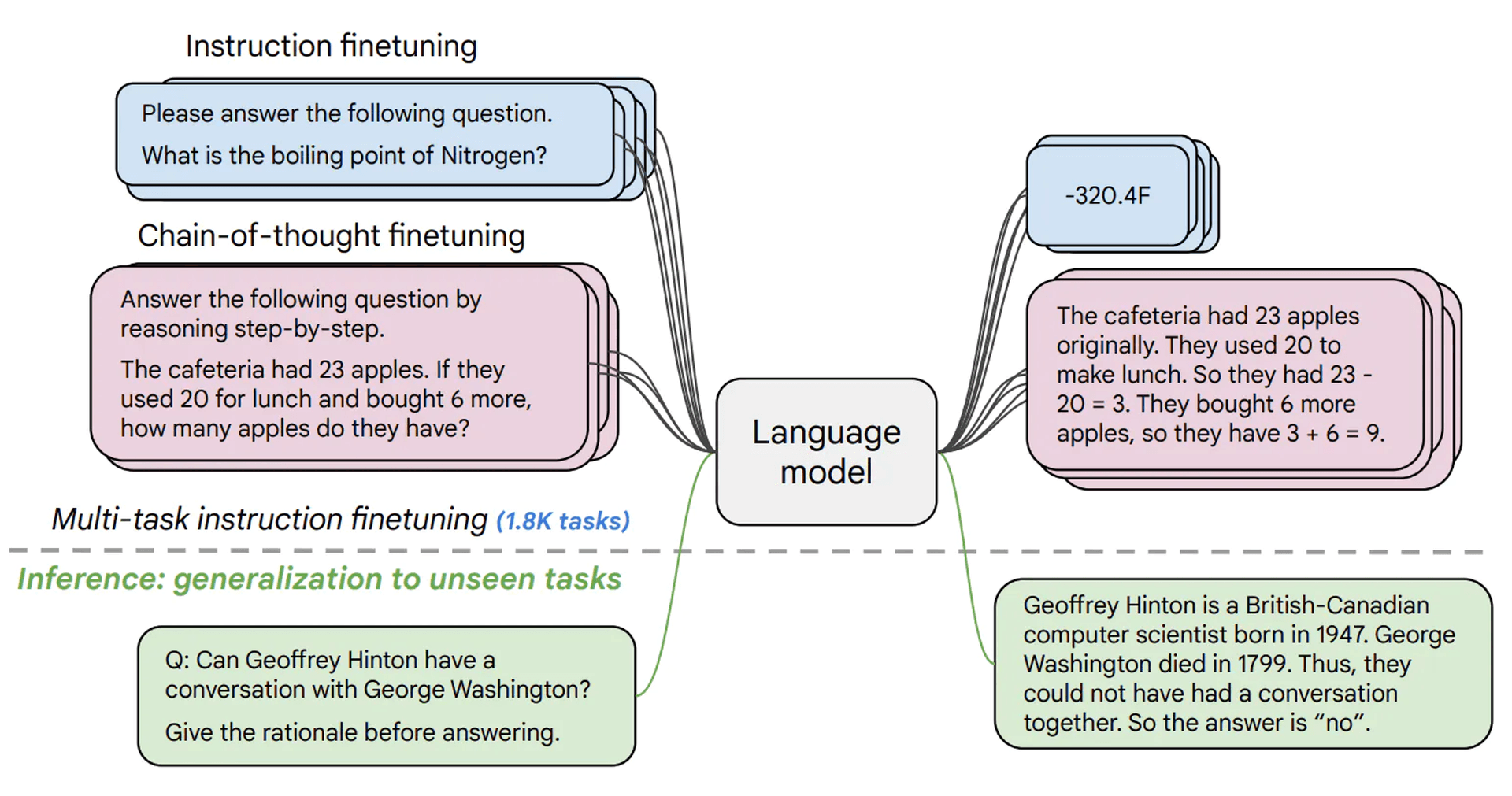

The Scaling Instruction-Finetuned Language Models paper examines the technique of instruction finetuning, paying close attention to scaling the number of tasks, increasing the model size, and fine-tuning on chain-of-thought data. The findings of the paper demonstrate that instruction finetuning is an effective way to enhance the performance and functionality of pre-trained language models.

Instruction finetuning:

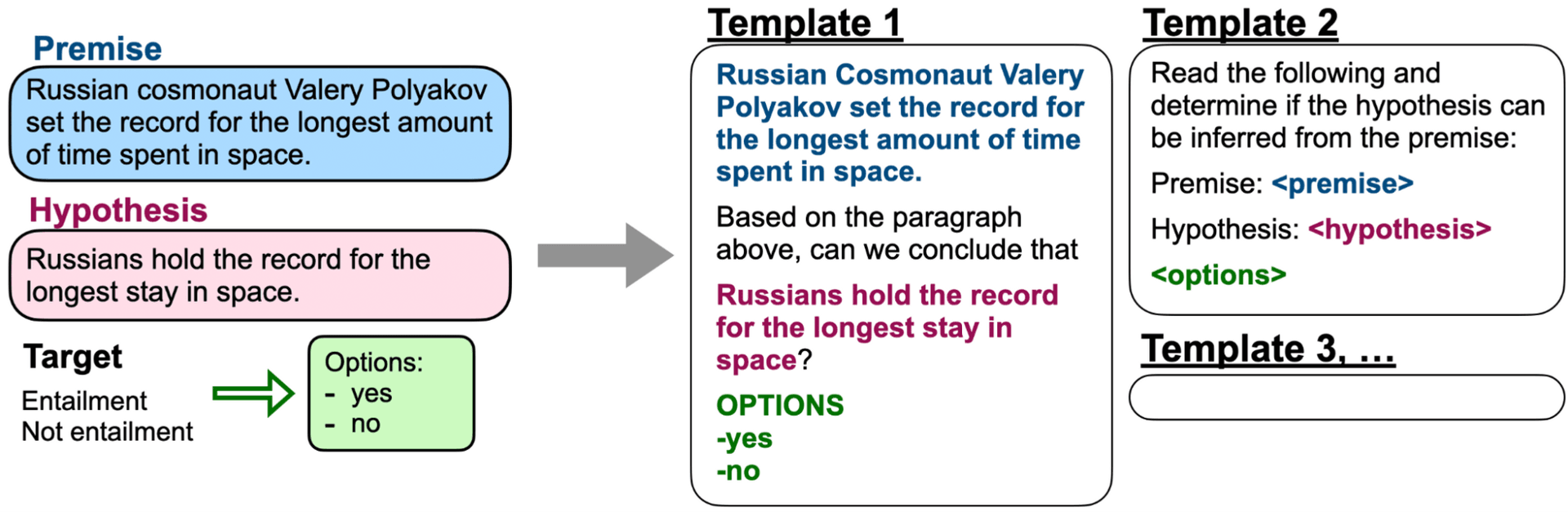

Example templates for a natural language inference dataset.

Ref: https://ai.googleblog.com/2021/10/introducing-flan-more-generalizable.html

Instruction finetuning trains a language model on a large collection of tasks phrased as natural-language instructions, so that it becomes more versatile across NLP tasks rather than specialized for a single one. The instruction-tuning phase of FLAN required only a small number of updates compared with the substantial computation involved in pre-training, making it a lightweight addition to the main pre-training process. Because the model learns to follow instructions rather than to solve one fixed task, FLAN can perform well on a diverse set of tasks it never saw during training.

Training FLAN on these instructions not only improves its ability to solve the specific instructions it saw during training but also improves its ability to follow instructions in general. To avoid the time and resources needed to write a new instruction dataset from scratch, the authors used templates to convert existing datasets into an instructional format.
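The template approach can be sketched in a few lines: one labeled natural language inference example is rendered through several instruction phrasings, producing several (instruction, answer) training pairs. The three templates below are hypothetical, written in the spirit of the figure above rather than copied from FLAN's actual template set, and the label encoding (0 = entailment, 1 = neutral, 2 = contradiction) is an assumption.

```python
# Hypothetical NLI templates (not FLAN's actual ones).
OPTIONS = "OPTIONS:\n- yes\n- it is not possible to tell\n- no"
NLI_TEMPLATES = [
    "Premise: {premise}\nHypothesis: {hypothesis}\n"
    "Does the premise entail the hypothesis?\n{options}",
    "{premise}\nBased on the paragraph above, can we conclude that "
    "\"{hypothesis}\"?\n{options}",
    "Read the following and determine if the hypothesis can be inferred "
    "from the premise:\nPremise: {premise}\nHypothesis: {hypothesis}\n{options}",
]
# Assumed label encoding: 0 = entailment, 1 = neutral, 2 = contradiction.
LABEL_TO_ANSWER = {0: "yes", 1: "it is not possible to tell", 2: "no"}


def to_instructions(example):
    """Render one labeled NLI example through every template."""
    answer = LABEL_TO_ANSWER[example["label"]]
    return [
        (
            template.format(
                premise=example["premise"],
                hypothesis=example["hypothesis"],
                options=OPTIONS,
            ),
            answer,
        )
        for template in NLI_TEMPLATES
    ]


example = {
    "premise": "A dog is running through the snow.",
    "hypothesis": "An animal is outside.",
    "label": 0,
}
for source, target in to_instructions(example):
    print(source, "->", target, sep="\n")
```

Varying the phrasing of the same underlying example is what pushes the model to follow the instruction rather than memorize one fixed prompt format.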

The results of the study indicate that, after this training, FLAN performs very well on the specific instructions it was trained on and also shows strong proficiency in following new instructions in general.

Would you like to try it?

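Google’s Flan-T5 is available as five pre-trained checkpoints:

- Flan-T5-small (about 80M parameters)

- Flan-T5-base (about 250M parameters)

- Flan-T5-large (about 780M parameters)

- Flan-T5-XL (about 3B parameters)

- Flan-T5-XXL (about 11B parameters)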

See the list of available pre-trained T5 models, here.
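To run a checkpoint locally, a minimal sketch using the Hugging Face `transformers` library might look like the following. It assumes `transformers` and PyTorch are installed (`return_tensors="pt"` requests PyTorch tensors) and that the checkpoints are fetched from the Hugging Face Hub under the `google/flan-t5-*` names; the `checkpoint_id` and `generate` helpers are hypothetical wrappers, not part of the library.

```python
# Hugging Face Hub identifiers for the five public FLAN-T5 checkpoints.
CHECKPOINTS = {
    "small": "google/flan-t5-small",
    "base": "google/flan-t5-base",
    "large": "google/flan-t5-large",
    "xl": "google/flan-t5-xl",
    "xxl": "google/flan-t5-xxl",
}


def checkpoint_id(size):
    """Map a size name (case-insensitive) to its Hub identifier."""
    try:
        return CHECKPOINTS[size.lower()]
    except KeyError:
        raise ValueError(
            f"unknown size {size!r}; choose from {sorted(CHECKPOINTS)}"
        ) from None


def generate(prompt, size="base", max_new_tokens=50):
    """Run one prompt through a FLAN-T5 checkpoint and return the decoded text."""
    # Imported here so the helpers above work without transformers installed.
    from transformers import AutoModelForSeq2SeqLM, AutoTokenizer

    name = checkpoint_id(size)
    tokenizer = AutoTokenizer.from_pretrained(name)
    model = AutoModelForSeq2SeqLM.from_pretrained(name)
    inputs = tokenizer(prompt, return_tensors="pt")  # PyTorch tensors
    outputs = model.generate(**inputs, max_new_tokens=max_new_tokens)
    return tokenizer.decode(outputs[0], skip_special_tokens=True)
```

For example, `generate("Translate English to German: How old are you?")` downloads the base checkpoint on first use and returns the decoded output string. This sketch reloads the model on every call for simplicity; a real application would load it once and reuse it. The `small` and `base` checkpoints are a sensible starting point on a laptop, while XL and XXL need considerably more memory.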

Try it out here:

What are some use cases?

FLAN-T5 has several potential use cases:

- Text Generation: FLAN-T5 can be used to generate text based on a prompt or input. This is ideal for content creation and creative writing including writing fiction, poetry, news articles, or product descriptions. The model can be fine-tuned for specific writing styles or genres to improve the quality of the output.

- Text Classification: FLAN-T5 can be used to classify text into different categories, such as spam or non-spam, positive or negative, or topics such as politics, sports, or entertainment. This can be useful for a variety of applications, such as content moderation, customer support, or personalized recommendations.

- Text Summarization: FLAN-T5 can be fine-tuned to generate concise summaries of long articles and documents, which makes it useful for news aggregation and information retrieval.

- Sentiment Analysis: FLAN-T5 can be used to analyze the sentiment of text, such as online reviews, news articles, or social media posts. This can help businesses to understand how their products or services are being received, and to make informed decisions based on this data.

- Question-Answering: FLAN-T5 can be fine-tuned to answer questions in a conversational manner, which makes it a good fit for customer service and support.

- Translation: FLAN-T5 can translate between languages and can be fine-tuned further for specific language pairs or domains, which makes it useful for multilingual content creation and localization.

- Chatbots and Conversational AI: FLAN-T5 can be used to create conversational AI systems that can respond to user input in a natural and engaging manner. The model can be trained to handle a wide range of topics and respond in a conversational tone that is appropriate for the target audience.
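Most of these use cases come down to phrasing the task as an instruction and, for classification-style tasks, mapping the generated text back onto a fixed label set. A minimal sketch, assuming hypothetical prompt wordings (FLAN-T5 accepts free-form instructions, so none of these exact templates is required) and a simple prefix-matching rule for labels; the model call itself is omitted.

```python
# Hypothetical zero-shot prompt templates, one per use case.
TEMPLATES = {
    "summarize": "Summarize the following article in one sentence:\n\n{text}",
    "classify_topic": "Which topic is this article about: politics, sports, or entertainment?\n\n{text}",
    "sentiment": "Is the sentiment of this review positive or negative?\n\n{text}",
    "qa": "Answer the question based on the context.\n\nContext: {context}\nQuestion: {question}",
    "translate": "Translate to German: {text}",
}


def make_prompt(task, **fields):
    """Fill the template for `task` with the given fields."""
    return TEMPLATES[task].format(**fields)


def parse_label(generated, labels):
    """Map free-form model output onto one of the allowed labels (None if no match)."""
    answer = generated.strip().lower()
    for label in labels:
        if answer.startswith(label.lower()):
            return label
    return None


print(make_prompt("sentiment", text="Great value for the price."))
```

Returning `None` for unmatched output makes it explicit when the model answered outside the label set, which is safer than silently picking a default class.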

Are there any limitations/drawbacks?

FLAN-T5 also has some drawbacks that limit its effectiveness in certain applications. Here are some of the key limitations of FLAN-T5:

- Data bias: One of the major limitations of FLAN-T5 is its data bias. The model is trained on large amounts of text data and may inherit the biases present in that data. This can result in incorrect outputs and can even perpetuate harmful stereotypes.

- Resource Intensive: The larger checkpoints (XL and XXL) require a large amount of computational power and memory to run, which makes them difficult for smaller companies or individual developers to use effectively. This can limit the potential applications of FLAN-T5, especially in low-resource environments, although the smaller checkpoints are far less demanding.

- Unreliable Output: FLAN-T5, like other language models, can sometimes generate unreliable outputs, especially when it is presented with new or unusual inputs. This can make it difficult to use the model in real-world applications where accuracy is critical.

- Training time: Training FLAN-T5 models requires a large amount of computational resources and takes a considerable amount of time. This can make it challenging to quickly implement new models and test different configurations.
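To put "resource intensive" in numbers, the memory needed just to hold the weights is roughly the parameter count times the bytes per parameter. A back-of-the-envelope sketch, using approximate, rounded parameter counts for the five checkpoints (so treat the results as estimates):

```python
# Approximate parameter counts for the public FLAN-T5 checkpoints (rounded).
PARAMS = {"small": 80e6, "base": 250e6, "large": 780e6, "xl": 3e9, "xxl": 11e9}
BYTES_PER_PARAM = {"float32": 4, "bfloat16": 2, "int8": 1}


def weight_memory_gb(size, dtype="float32"):
    """Memory for the weights alone, in decimal GB.

    Activations, generation caches, and (for training) gradients and
    optimizer state all come on top of this figure.
    """
    return PARAMS[size] * BYTES_PER_PARAM[dtype] / 1e9


for size in PARAMS:
    print(
        f"{size:>5}: {weight_memory_gb(size):6.2f} GB float32, "
        f"{weight_memory_gb(size, 'bfloat16'):6.2f} GB bfloat16"
    )
```

By this estimate, the XXL checkpoint needs about 44 GB for its float32 weights alone (about 22 GB in bfloat16), while the small checkpoint needs well under 1 GB. Full fine-tuning with the Adam optimizer in float32 typically needs roughly four times the weight memory (weights, gradients, and two optimizer moment buffers), before counting activations.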

Is Google’s Flan-T5 a better alternative to OpenAI’s GPT-3?

Google’s FLAN-T5 has certain advantages over OpenAI's GPT-3. However, it is important to note that both models have their own strengths and weaknesses, and the superiority of one over the other largely depends on the specific task or application.

Here are a few reasons why FLAN-T5 might be considered a better alternative to GPT-3:

Open Source Model Checkpoints: Unlike OpenAI's GPT-3, FLAN-T5 is an open-source LLM whose pretrained model weights (checkpoints) have been released to the public. This allows a higher level of customization, research, and optimization than is possible with GPT-3, which is available only through OpenAI's API.

Efficiency: Even the largest FLAN-T5 checkpoint (about 11B parameters) is far smaller than GPT-3 (175B parameters), so FLAN-T5 is much cheaper to run and faster to fine-tune. FLAN-T5 keeps the architecture and model sizes of the T5 checkpoints it starts from; its gains over T5 come from instruction finetuning rather than from a more efficient design. This is an advantage for researchers and developers who want to experiment with the model, as well as for businesses that want to implement it in their applications.

Better Performance on Certain Tasks: FLAN-T5 is specifically designed to perform well on a range of language tasks, including machine translation, summarization, and text classification. This means that it may be more effective than GPT-3 on these specific tasks.

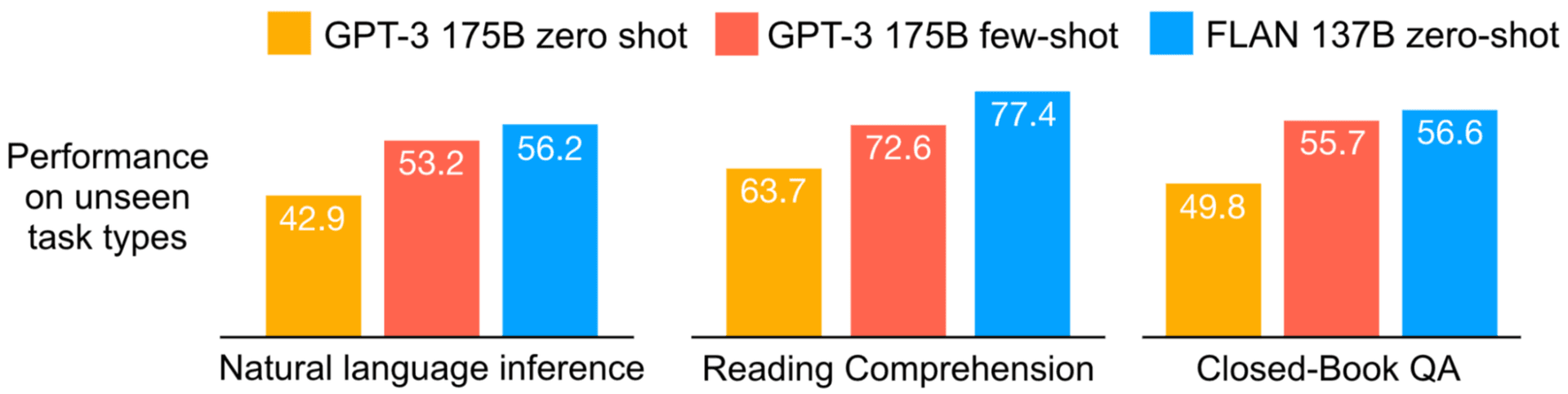

According to Google’s blog post on the original FLAN model (a 137B-parameter model from 2021 that predates FLAN-T5), FLAN was evaluated on 25 tasks and improved over zero-shot prompting of the untuned base model on 21 of them. It also outperformed zero-shot GPT-3 on 20 of the 25 tasks, and even outperformed few-shot GPT-3 on some tasks.

Source: https://ai.googleblog.com/2021/10/introducing-flan-more-generalizable.html

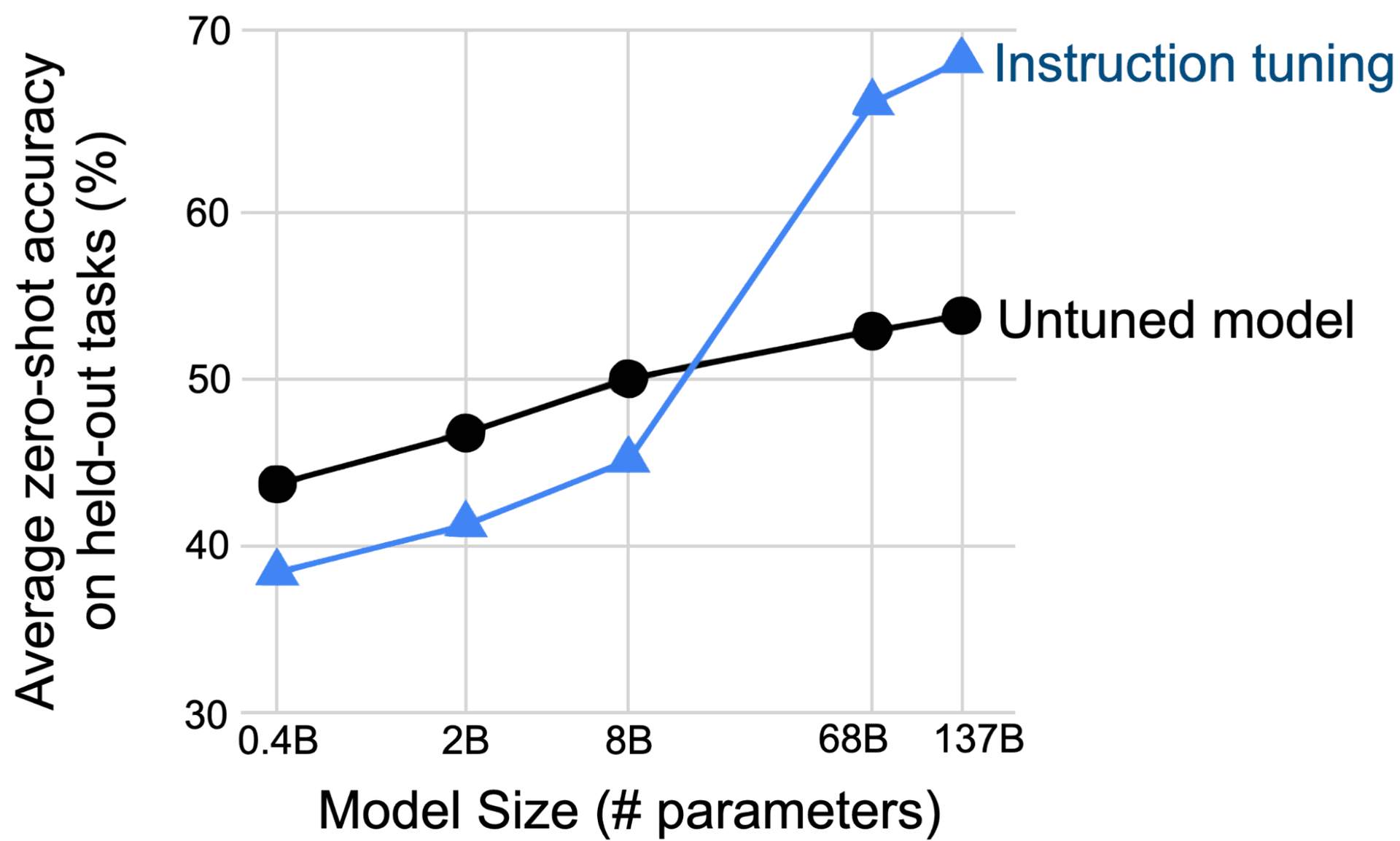

The findings also indicate that model size plays a crucial role in the effectiveness of instruction tuning in FLAN. At smaller scales, instruction tuning actually reduced performance on unseen tasks; only at larger scales did the model generalize from the instructions in its training data to unseen tasks. One possible explanation is that smaller models lack the capacity to learn the many training tasks while still performing well on new ones.

Source: https://ai.googleblog.com/2021/10/introducing-flan-more-generalizable.html

Easy Customization: Because its weights are public, researchers and developers can fine-tune FLAN-T5 for specific tasks and domains and run the result on their own infrastructure. This is an advantage over GPT-3, whose weights are not released and which can only be customized through OpenAI's API.
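Customizing FLAN-T5 usually starts with reshaping domain data into instruction-style source/target pairs. A minimal sketch of two preparation steps, assuming a hypothetical support-ticket classification task: formatting (text, label) pairs into records, and replacing padding positions in tokenized targets with -100, the value PyTorch's `CrossEntropyLoss` ignores by default, so padding does not count toward the loss. The T5 tokenizer uses 0 as its padding id; tokenization and the training loop itself are omitted.

```python
IGNORE_INDEX = -100  # default ignore_index of torch.nn.CrossEntropyLoss
T5_PAD_ID = 0        # padding id used by the T5 tokenizer


def to_records(examples, instruction):
    """Turn (text, label) pairs into instruction-style source/target records."""
    return [
        {"source": f"{instruction}\n\n{text}", "target": label}
        for text, label in examples
    ]


def mask_padding(label_ids, pad_id=T5_PAD_ID):
    """Replace padding in tokenized targets so it is ignored by the loss."""
    return [[IGNORE_INDEX if tok == pad_id else tok for tok in seq] for seq in label_ids]


# Hypothetical domain data for illustration.
tickets = [
    ("The app crashes when I log in.", "bug report"),
    ("Please add a dark mode.", "feature request"),
]
records = to_records(
    tickets,
    "Classify this support ticket as a bug report, feature request, or billing question.",
)
print(records[0])
```

Phrasing the fine-tuning data as instructions keeps it in the same format FLAN-T5 was instruction-tuned on, which tends to make the customized model easier to prompt.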

Lower Cost: As FLAN-T5 requires fewer computational resources to train compared to GPT-3, it is likely to be more cost-effective. This is an advantage for businesses who want to implement the model in their applications without incurring high costs.

In Conclusion

Both FLAN-T5 and GPT-3 have their own strengths and weaknesses, and which one is better largely depends on the specific task or application, as well as on priorities such as computational efficiency, performance, cost-effectiveness, and ease of customization. AI also continues to evolve, with tools such as AI answer generators that return answers or solutions to a simple query.

Overall, FLAN-T5 offers a powerful and flexible solution for natural language processing and language generation tasks for businesses, developers, and researchers who are looking for a more affordable and accessible option.

If you want to see how we've used LLMs in the conversational space, don't forget to subscribe to notifications on the Product Hunt page for our Intelligent Reports for Audio/Video. Our AI-powered system can turn any conversation or meeting into a valuable resource!

Check out our other blogs for more interesting content.