OpenAI Whisper - Intro & Running It Yourself

John Jacob, Shantanu Nair

Introduction

Recently, OpenAI, the company behind GPT and DALL-E, open-sourced its new automatic speech recognition (ASR) model, Whisper, a multilingual, multitask system that approaches human-level performance. Whisper performs well across many languages and diverse accents, and the accompanying paper details several interesting ideas and techniques behind its training and dataset-building strategy. Unusually, Whisper supports transcription in multiple languages as well as translation from those languages into English. OpenAI reports that the model was trained on 680,000 hours of supervised data, which is like listening to about 77 years of continuous audio! They claim it approaches human-level robustness and accuracy on English speech.

Whisper Architecture

For the model architecture, OpenAI uses a rather vanilla Transformer-based encoder-decoder model. Introduced by Vaswani et al. in the now-famous "Attention Is All You Need" paper, this architecture, which has been responsible for the majority of recent breakthroughs in NLP, is now making its mark in the ASR space.

In their approach, the input audio is divided into 30-second blocks, each of which is converted into a log-Mel spectrogram and passed to the encoder for processing. The decoder handles multiple tasks on its own, such as language identification, phrase-level timestamps, multilingual speech transcription, and to-English speech translation. A custom set of special tokens tells the decoder which task to perform. The decoder, being an audio-conditional language model, is also trained to use the preceding transcript text as context, which helps it deal with ambiguous audio.
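The chunking step can be sketched in plain Python. This is a simplified illustration, not the reference implementation: the 16 kHz sample rate, 10 ms hop, and 80 mel channels are taken from the Whisper paper rather than from this article, and the helper names are hypothetical. The real pipeline also moves its window based on predicted timestamps instead of cutting fixed blocks.

```python
SAMPLE_RATE = 16_000   # Hz; assumed from the Whisper paper
CHUNK_SECONDS = 30     # block length described in the article
HOP_LENGTH = 160       # samples between spectrogram frames (10 ms); assumed
N_MELS = 80            # mel channels in the log-Mel spectrogram; assumed

CHUNK_SAMPLES = SAMPLE_RATE * CHUNK_SECONDS  # 480,000 samples per block


def split_into_chunks(samples):
    """Split a list of samples into 30-second blocks, zero-padding the last one."""
    chunks = []
    for start in range(0, len(samples), CHUNK_SAMPLES):
        chunk = samples[start:start + CHUNK_SAMPLES]
        # Pad the final block with silence so every block has the same length.
        chunk = chunk + [0.0] * (CHUNK_SAMPLES - len(chunk))
        chunks.append(chunk)
    return chunks


def spectrogram_shape():
    """Shape (mel channels, frames) of the spectrogram computed for one block."""
    return (N_MELS, CHUNK_SAMPLES // HOP_LENGTH)


# 70 seconds of silence as stand-in audio: two full blocks plus a padded third.
audio = [0.0] * (SAMPLE_RATE * 70)
chunks = split_into_chunks(audio)
print(len(chunks), len(chunks[-1]), spectrogram_shape())
```

Under these assumptions, every block reaches the encoder as an 80 x 3000 spectrogram, regardless of how long the original recording was.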

Images Source: OpenAI Blog

OpenAI states that while many other models have been trained with a focus on performing well on particular datasets or test sets, with Whisper they instead focused on supervised pretraining on much larger datasets, built by combining scraped data with large compiled datasets and keeping only the usable data through clever filtering heuristics. Models trained this way tend to be more robust and generalize much more effectively to real-world use cases. While some models outperform Whisper on individual test sets, by scaling their weakly supervised pretraining the OpenAI team achieved very impressive results without the self-supervision and self-training techniques typically used by current state-of-the-art ASR models.

About a third of the dataset used for training was non-English. Training alternated between two tasks: transcribing the audio in its original language, or translating it to English. The OpenAI team found this to be an effective way for Whisper to learn speech-to-text translation, and it outperformed the supervised training methods employed by current state-of-the-art models when tested on the CoVoST2 multilingual corpus for English translation.

Image Source: OpenAI Blog
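To make the task switching concrete, here is a minimal sketch of how a special-token prefix could select between transcription and translation. The function name is hypothetical, and the token spellings follow the paper's diagram and the open-source tokenizer as best I understand them; the real model operates on token IDs rather than strings.

```python
def build_decoder_prompt(language, task, timestamps=True, previous_text=None):
    """Return the special-token prefix that tells the decoder what to do.

    Hypothetical helper for illustration; not part of the Whisper package.
    """
    if task not in ("transcribe", "translate"):
        raise ValueError("task must be 'transcribe' or 'translate'")

    prompt = []
    if previous_text:
        # Earlier transcript text gives the decoder context for ambiguous audio.
        prompt += ["<|startofprev|>", previous_text]

    # Start marker, then the spoken language, then the task to perform.
    prompt += ["<|startoftranscript|>", f"<|{language}|>", f"<|{task}|>"]

    if not timestamps:
        # Ask for plain text without phrase-level timestamps.
        prompt.append("<|notimestamps|>")
    return prompt


print(build_decoder_prompt("fr", "transcribe"))
print(build_decoder_prompt("fr", "translate", timestamps=False))
```

The same French audio would yield French text with the first prefix and English text with the second, which is why one model can be trained on both tasks at once.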

With a transcription model like Whisper, we can communicate better with everyone, regardless of the language barriers in our world. This could make the world a smaller place and help us collaborate and work together to solve problems.

Languages and Models

As shown in the table below, Whisper comes in five model sizes; four of them (tiny, base, small, and medium) also have English-only versions (.en models).

| Size | Parameters | English-only model | Multilingual model | Required VRAM | Relative speed |
|---|---|---|---|---|---|
| tiny | 39 M | tiny.en | tiny | ~1 GB | ~32x |
| base | 74 M | base.en | base | ~1 GB | ~16x |
| small | 244 M | small.en | small | ~2 GB | ~6x |
| medium | 769 M | medium.en | medium | ~5 GB | ~2x |
| large | 1550 M | N/A | large | ~10 GB | 1x |

Table Source: Whisper GitHub README

Here, you can see a word error rate (WER) breakdown by language on the Fleurs dataset using the large model, created from the data provided in the paper and compiled into a neat visualization by AssemblyAI.

Image Source: AssemblyAI Blog, Data Source: OpenAI Paper
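For readers new to the metric behind that breakdown, WER counts the word-level substitutions, deletions, and insertions needed to turn the model's output into the reference transcript, divided by the number of reference words. Below is a minimal sketch using a standard edit-distance computation; the function name is my own, and published evaluations typically normalize text (casing, punctuation) first, which is omitted here.

```python
def word_error_rate(reference, hypothesis):
    """Word error rate: word-level edit distance divided by reference length."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen so far
    # (excluding the current one) and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,         # deletion: reference word missing from output
                curr[j - 1] + 1,     # insertion: extra word in output
                prev[j - 1] + cost,  # match or substitution
            ))
        prev = curr
    return prev[-1] / len(ref)


reference = "the onboarding process is pretty intensive"
hypothesis = "the onboarding process was pretty intense"
print(f"WER: {word_error_rate(reference, hypothesis):.3f}")
```

In this made-up example two of the six reference words are substituted, so the WER is 2/6. Lower is better, and a perfect transcript scores 0.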

Trying out Whisper yourself

- Run whisper in your terminal

- Run whisper in Python

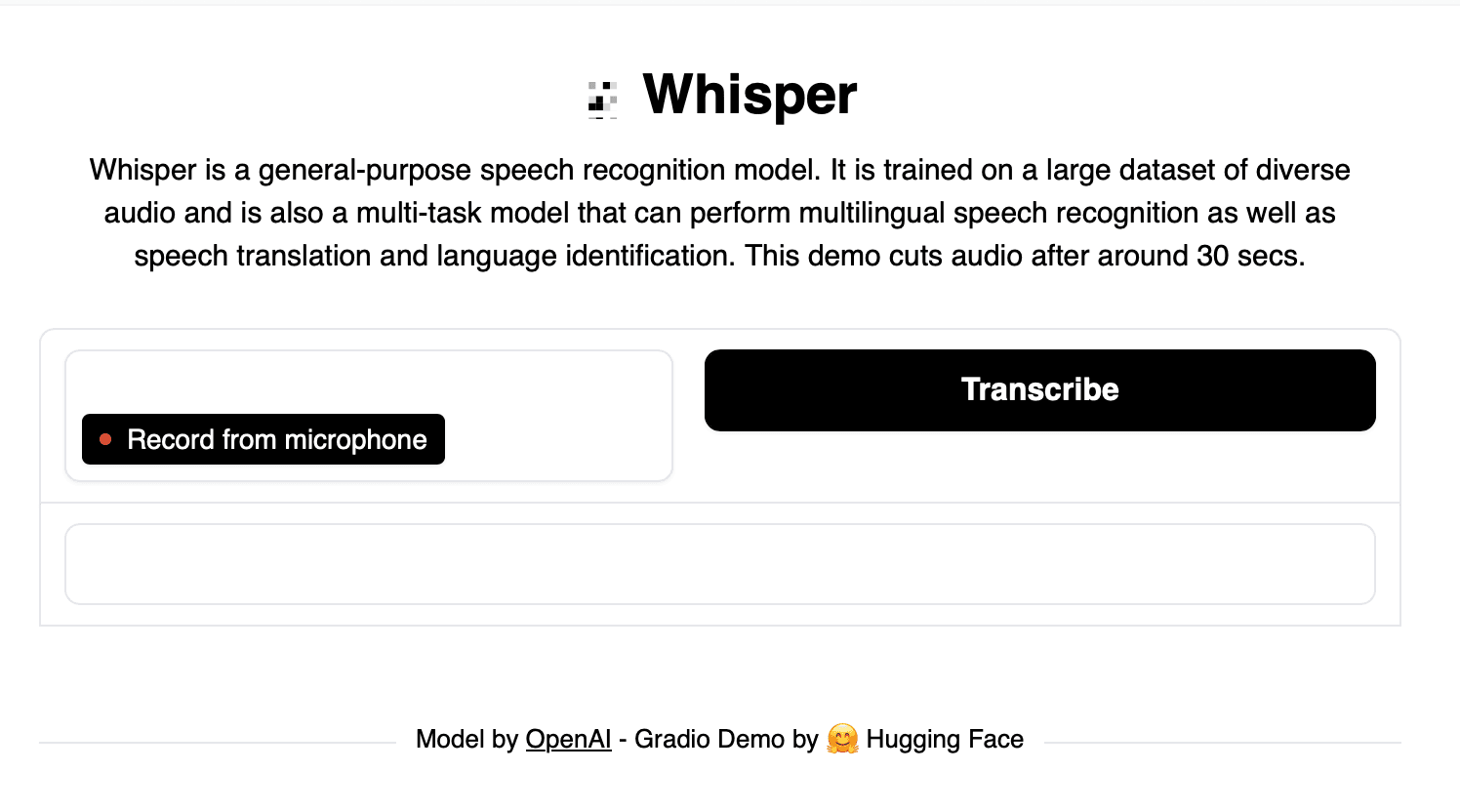

- Run the Whisper demo using Gradio Spaces

Running Whisper in your terminal

Let’s learn how to install and use Whisper from your terminal.

- Install dependencies

Whisper's codebase is expected to be compatible with Python 3.7+ and recent versions of PyTorch. Python 3.9.9 and PyTorch 1.10.1 were used to train and test the model. Install Python and PyTorch if you haven’t already. Whisper also requires 'ffmpeg' to be installed on your system. FFmpeg is a media processing library used for reading audio and video files. Use the commands below to install it:

```bash
# On Linux (Ubuntu or Debian)
sudo apt update && sudo apt install ffmpeg

# On macOS via brew
brew install ffmpeg
```

- Install Whisper

Install Whisper by running the following command:

```bash
pip install git+https://github.com/openai/whisper.git
```

- Run Whisper

Navigate to the directory where your audio file is located. For this example, we will generate a transcript for the file test.wav by running the following command:

```bash
whisper test.wav
```

The output will be displayed in the terminal:

```
$ whisper test.wav
Detecting language using up to the first 30 seconds. Use `--language` to specify the language
Detected language: english
[00:00.000 --> 00:22.000] That's all good. So currently our onboarding process is pretty intensive. It's very much involved with the customer success managers doing a very close handover.
```

Running Whisper in Python

Using Whisper for transcription in Python is super easy. The OpenAI team has provided more examples and instructions on their GitHub repository page, but here’s a quick Python snippet to help you get started with the model.

```python
import whisper

# Load the multilingual "base" model (downloaded on first use).
model = whisper.load_model("base")
# Transcribe the audio file; the result is a dictionary.
result = model.transcribe("test.wav")
print(result["text"])
```

You should get your audio’s transcription:

```
"That's all good. So currently our onboarding process is pretty intensive. It's very much involved with the customer success managers doing a very close handover."
```

Explore the result object to see the detected segments: Whisper returns phrase-level timestamps as well as the detected language, in this case “en” for English.
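As a starting point for exploring the result object, the sketch below prints each segment in the same style as the terminal output. It assumes the result is a dictionary with "language" and "segments" keys, where each segment carries "start", "end", and "text". The helper names are hypothetical, and the example dictionary is a hand-written stand-in so the snippet runs without a model or audio file.

```python
def format_timestamp(seconds):
    """Format a time in seconds as MM:SS.mmm."""
    total_ms = round(seconds * 1000)
    minutes, ms = divmod(total_ms, 60_000)
    secs, ms = divmod(ms, 1000)
    return f"{minutes:02d}:{secs:02d}.{ms:03d}"


def format_segments(result):
    """Return one '[start --> end] text' line per segment in the result."""
    lines = []
    for segment in result["segments"]:
        start = format_timestamp(segment["start"])
        end = format_timestamp(segment["end"])
        lines.append(f"[{start} --> {end}] {segment['text'].strip()}")
    return lines


# Hand-written stand-in; a real one would come from model.transcribe("test.wav").
example_result = {
    "language": "en",
    "text": " That's all good.",
    "segments": [{"start": 0.0, "end": 22.0, "text": " That's all good."}],
}

print("Detected language:", example_result["language"])
for line in format_segments(example_result):
    print(line)
```

Passing the dictionary returned by `model.transcribe` in place of `example_result` should work the same way, assuming the keys match.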

Try out Whisper using Gradio Spaces by Hugging Face

Hugging Face’s Spaces feature makes it a breeze to deploy and play with interactive demos of your models. Visit this Space to jump right in and play around with the Whisper model in a demo created using Hugging Face’s Gradio SDK.

Conclusion

OpenAI’s Whisper is one of the best automatic speech recognition models available today in terms of robustness to noise, and it demonstrates how far scaling up training data collection can take you, even with a simple architecture. Given its high accuracy and open-source availability, Whisper is likely to become a widely used tool among researchers and developers, and it opens the way for developers in particular to build interfaces for a variety of applications.

However, Whisper currently does not provide word-level timestamps or accurate phrase-level timestamps. We at Exemplary are exploring a viable solution for adding word-level timestamps to Whisper before making it available through our Unified API.